Connect AI agents and workflows to any function, deployed anywhere*

* Across networks, even behind private VPCs

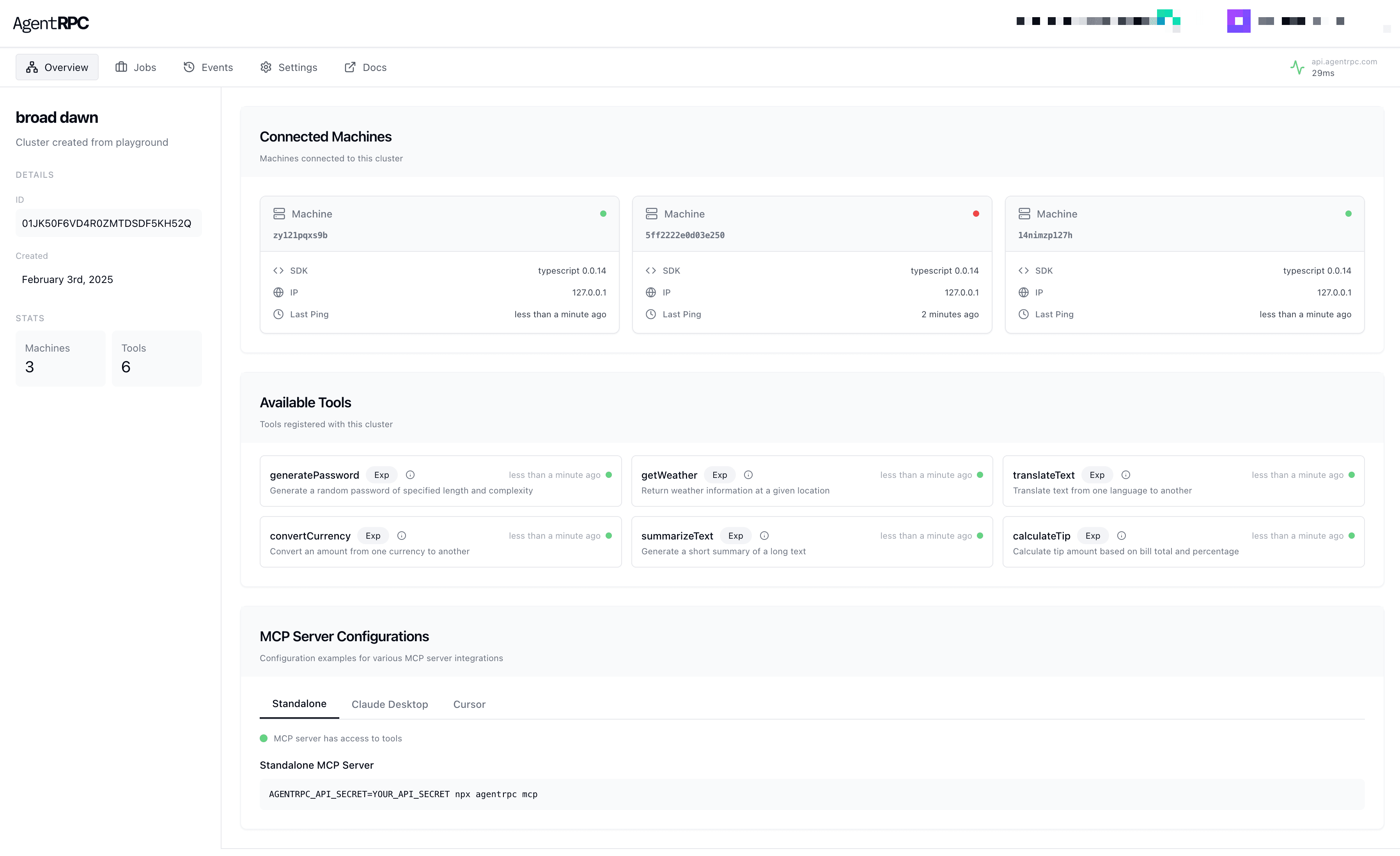

AgentRPC is a universal RPC layer that unifies all your tools from multiple programming languages into a single interface, with automated service discovery and routing.

> Quickstart

1. Register a Tool

2. Connect to an Agent

Powerful Observability for all your tools

The middleware for the agentic era

Connect agents to tools written in multiple languages. Currently supports TypeScript and Go (.NET coming soon).

Register functions and APIs even if they are in private VPCs with no open ports required.

Long-polling SDKs let you call long-running functions and APIs that would exceed HTTP timeout limits.

Tracing, metrics, and events with our hosted platform for complete visibility.

The AgentRPC platform tracks function health and handles automatic failover and retries.

Out-of-the-box support for MCP and OpenAI SDK-compatible agents.

Frequently Asked Questions

Suppose you're building an AI agent or an LLM automation. You want to extend the agent's capabilities via tool calling, but the tools you want to call are hosted on a different service, maybe even in a private VPC. At this point, you'd either have to expose the private service to the public internet or build a custom integration. AgentRPC gives you a third option: register a function with our native SDK and get an OpenAI SDK-compatible tool definition.

When you wrap a tool and call listen(), AgentRPC registers that tool with api.agentrpc.com. The SDK then polls periodically for new calls and invokes the tool whenever a new call is detected.
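The register-then-poll flow described above can be sketched with an in-memory stand-in for the hosted service. Everything in this sketch is hypothetical and for illustration only: `ControlPlane`, `ToolWorker`, `pollOnce`, and the job shape are invented names, not the AgentRPC SDK's API, and a real SDK would talk to api.agentrpc.com over the network rather than to a local object.

```typescript
// Hypothetical in-memory model of "register a tool, then poll for calls".
// None of these names come from the AgentRPC SDK.

type Handler = (input: unknown) => unknown;

interface Job {
  id: number;
  tool: string;
  input: unknown;
}

// Stand-in for the hosted service: it knows which tools exist and queues calls.
class ControlPlane {
  private tools = new Set<string>();
  private queue: Job[] = [];
  private results = new Map<number, unknown>();
  private nextId = 1;

  registerTool(name: string): void {
    this.tools.add(name);
  }

  // An agent enqueues a call; it never talks to the worker directly.
  enqueueCall(tool: string, input: unknown): number {
    if (!this.tools.has(tool)) throw new Error(`unknown tool: ${tool}`);
    const id = this.nextId++;
    this.queue.push({ id, tool, input });
    return id;
  }

  // The worker pulls the next pending job, if any.
  nextJob(): Job | undefined {
    return this.queue.shift();
  }

  submitResult(id: number, result: unknown): void {
    this.results.set(id, result);
  }

  getResult(id: number): unknown {
    return this.results.get(id);
  }
}

// Stand-in for the SDK running next to your function.
class ToolWorker {
  private handlers = new Map<string, Handler>();
  private plane: ControlPlane;

  constructor(plane: ControlPlane) {
    this.plane = plane;
  }

  register(name: string, handler: Handler): void {
    this.handlers.set(name, handler);
    this.plane.registerTool(name);
  }

  // One polling cycle: fetch a job, run the local handler, report the result.
  // Returns true if a job was processed.
  pollOnce(): boolean {
    const job = this.plane.nextJob();
    if (!job) return false;
    const handler = this.handlers.get(job.tool);
    if (!handler) throw new Error(`no handler for ${job.tool}`);
    this.plane.submitResult(job.id, handler(job.input));
    return true;
  }
}

const plane = new ControlPlane();
const worker = new ToolWorker(plane);
worker.register("add", (input) => {
  const { a, b } = input as { a: number; b: number };
  return a + b;
});

const callId = plane.enqueueCall("add", { a: 2, b: 3 });
worker.pollOnce();
console.log(plane.getResult(callId)); // 5
```

The point of the design is that the worker always initiates the exchange: the agent only ever talks to the control plane, and the function's location stays private.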

A few advantages. First, without AgentRPC you have to build the API and the service discovery mechanism for the agent (figuring out which endpoint to call). With AgentRPC, your function becomes universally discoverable by authenticated clients without hardcoding the endpoint or building a custom HTTP server. Second, HTTP APIs have HTTP timeouts, so your tools have to be short-running. AgentRPC tools can be long-running because they use an async polling mechanism. Third, you would otherwise have to ferry the tool definition for each tool to the agent. AgentRPC provides SDKs that co-locate the tool definition with the function and generate OpenAI SDK-compatible tool definitions at the point of use. There are other benefits too, including caching and automatic retries.
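To make the third point concrete, here is a minimal sketch of co-locating a tool's schema with its implementation and deriving a tool definition from it. The output follows the function-tool shape used by the OpenAI Chat Completions API (`type: "function"` with `name`, `description`, and JSON Schema `parameters`). `ToolSpec`, `toOpenAITool`, and the `getWeather` tool are hypothetical names for illustration, not the AgentRPC SDK's API.

```typescript
// Hypothetical sketch: keep a tool's schema next to its implementation and
// derive an OpenAI-style tool definition from it. Names here are illustrative.

interface JsonSchemaObject {
  type: "object";
  properties: Record<string, { type: string; description?: string }>;
  required: string[];
}

interface ToolSpec<I, O> {
  name: string;
  description: string;
  parameters: JsonSchemaObject;
  handler: (input: I) => O;
}

// Shape of a function tool in the OpenAI Chat Completions API.
interface OpenAITool {
  type: "function";
  function: {
    name: string;
    description: string;
    parameters: JsonSchemaObject;
  };
}

// Drop the handler and keep only what the model needs to see.
function toOpenAITool<I, O>(spec: ToolSpec<I, O>): OpenAITool {
  return {
    type: "function",
    function: {
      name: spec.name,
      description: spec.description,
      parameters: spec.parameters,
    },
  };
}

// The definition and the implementation live in one place.
const getWeather: ToolSpec<{ location: string }, string> = {
  name: "getWeather",
  description: "Return a weather summary for a location",
  parameters: {
    type: "object",
    properties: { location: { type: "string", description: "City name" } },
    required: ["location"],
  },
  handler: ({ location }) => `Sunny in ${location}`,
};

const weatherTool = toOpenAITool(getWeather);
console.log(JSON.stringify(weatherTool, null, 2));
```

Because the definition is generated from the same object that holds the handler, the two cannot drift apart when the function's inputs change.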

We are a managed service provider. We offer a hosted platform for AgentRPC that includes tracing, metrics, and events, and a set of SDKs to help you register tools and consume them.

Tools are executed on your infrastructure. AgentRPC is a middleware that sits between your agent and the function running on your infrastructure.

Once you register your function, the AgentRPC SDK starts polling api.agentrpc.com for new calls using a long polling mechanism. Because every connection is outbound from your infrastructure, there are no open ports or firewalls to configure.
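Long polling itself can be illustrated with a small sketch: the worker makes a request that is held open until a call arrives or a timeout elapses, then immediately polls again. `LongPollQueue`, `push`, and `poll` are hypothetical names, and the queue lives in memory here purely for illustration; this is not how the AgentRPC service is implemented.

```typescript
// Hypothetical sketch of long polling: a poll is held open until a call
// arrives or the timeout elapses. Names are illustrative only.

interface PendingCall {
  tool: string;
  input: unknown;
}

class LongPollQueue {
  private pending: PendingCall[] = [];
  private waiters: Array<(call: PendingCall | null) => void> = [];

  // Called on the agent side when a tool call is requested.
  push(call: PendingCall): void {
    const waiter = this.waiters.shift();
    if (waiter) waiter(call); // hand the call to a waiting poll immediately
    else this.pending.push(call); // otherwise keep it for the next poll
  }

  // Called by the worker. Resolves with a call as soon as one is available,
  // or with null once timeoutMs has passed.
  poll(timeoutMs: number): Promise<PendingCall | null> {
    const ready = this.pending.shift();
    if (ready) return Promise.resolve(ready);
    return new Promise((resolve) => {
      const waiter = (call: PendingCall | null) => {
        clearTimeout(timer);
        resolve(call);
      };
      const timer = setTimeout(() => {
        // Remove this waiter so a later push is not handed to a dead poll.
        this.waiters = this.waiters.filter((w) => w !== waiter);
        resolve(null);
      }, timeoutMs);
      this.waiters.push(waiter);
    });
  }
}

async function demo(): Promise<void> {
  const queue = new LongPollQueue();
  const idle = await queue.poll(20); // nothing queued: resolves with null
  const waiting = queue.poll(1000); // held open...
  queue.push({ tool: "getWeather", input: { location: "Paris" } });
  const call = await waiting; // ...until the call arrives
  console.log(idle, call ? call.tool : null);
}

demo();
```

Since the worker is always the one opening the connection, the same pattern works from behind a NAT or inside a private VPC.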

If your Agent is simple enough that it can call the functions directly within the same process, then AgentRPC is not for you. AgentRPC is designed for Agents that need to call external functions in distributed environments. If you have low-latency requirements, your use case might not tolerate the additional network hop of routing through AgentRPC.